Introduction:

Virtual Reality (VR) has transformed CAD (Computer-Aided Design) and 3D modeling by giving designers and engineers immersive, interactive views of their work. This technical article examines how VR design platforms for CAD and 3D modeling are built. We explore the software tools, techniques, and algorithms used to create these platforms, which let users visualize, manipulate, and iterate on their designs in a virtual environment.

Software Tools for VR Design Platforms:

1. CAD and 3D Modeling Software: The foundation of a VR design platform is robust CAD and 3D modeling software. Tools such as AutoCAD, SolidWorks, or Rhino let users create precise, detailed 3D models of their designs. They provide geometry creation, surface modeling, parametric design, and assembly management, so the models brought into the virtual environment are accurate and remain easy to modify.

2. Virtual Reality Engines and SDKs: To bring CAD and 3D models into VR, platforms rely on real-time 3D engines and software development kits (SDKs). Engines like Unity or Unreal Engine provide the framework for building interactive virtual environments and integrating VR support. SDKs and runtimes such as the Oculus SDK, SteamVR, or OpenVR offer APIs for headset integration, tracking, input handling, and stereo rendering, so the application runs smoothly on the target hardware.
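Every frame, the engine and SDK read the tracked head pose and render the scene twice, once from each eye. The sketch below shows only the geometric core of that step: offsetting the head position by half the interpupillary distance (IPD) along the head's right axis. It assumes a right-handed, y-up coordinate system in which the head's local +x axis points right, a unit quaternion for orientation, and a default IPD of 63 mm; the names `Quat`, `rotate`, and `eye_positions` are hypothetical, and in practice the VR runtime typically reports per-eye poses directly.

```python
import math
from dataclasses import dataclass


@dataclass
class Quat:
    """Unit quaternion (w, x, y, z) describing the head orientation."""
    w: float
    x: float
    y: float
    z: float


def rotate(q: Quat, v):
    """Rotate vector v by unit quaternion q, i.e. q * v * q^-1."""
    # Uses v' = v + w*t + u x t, where u = (x, y, z) and t = 2 * (u x v).
    ux, uy, uz = q.x, q.y, q.z
    vx, vy, vz = v
    tx = 2.0 * (uy * vz - uz * vy)
    ty = 2.0 * (uz * vx - ux * vz)
    tz = 2.0 * (ux * vy - uy * vx)
    return (
        vx + q.w * tx + (uy * tz - uz * ty),
        vy + q.w * ty + (uz * tx - ux * tz),
        vz + q.w * tz + (ux * ty - uy * tx),
    )


def eye_positions(head_pos, head_rot: Quat, ipd=0.063):
    """Return (left_eye, right_eye) world positions for a tracked head pose."""
    right = rotate(head_rot, (1.0, 0.0, 0.0))  # head's local +x in world space
    half = ipd / 2.0
    left_eye = tuple(p - half * r for p, r in zip(head_pos, right))
    right_eye = tuple(p + half * r for p, r in zip(head_pos, right))
    return left_eye, right_eye


# Head 1.6 m above the floor, turned 90 degrees to the left (about +y).
turn_left = Quat(math.cos(math.pi / 4), 0.0, math.sin(math.pi / 4), 0.0)
left, right = eye_positions((0.0, 1.6, 0.0), turn_left)
```

After the turn the user faces -x, so the eyes are separated along z rather than x; the engine would then build one view matrix from each of these positions.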

Techniques for VR Design Platforms:

1. Visualization and Rendering Techniques: To create realistic and immersive VR experiences, platforms use rendering techniques such as ray tracing, global illumination, and ambient occlusion, which simulate realistic lighting, shadows, and reflections. Physically based rendering (PBR) describes materials with parameters such as base color, metalness, and roughness and shades them using models of how light interacts with real surfaces, so materials and textures look consistent under different lighting conditions. Because a VR headset needs a separate image for each eye at a high, steady frame rate, expensive effects such as global illumination are often precomputed or approximated rather than fully computed every frame.

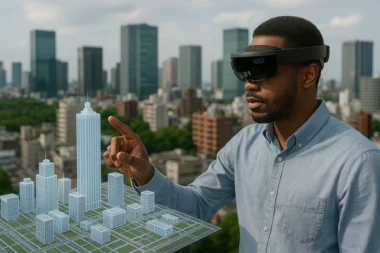

2. Gesture-Based Interaction: VR design platforms leverage gesture-based interaction techniques to enable users to interact with their CAD and 3D models. Hand-tracking technology and controllers capture the user’s hand movements and gestures, allowing them to manipulate and navigate the virtual environment. Techniques like gesture recognition, physics-based interactions, and object manipulation algorithms provide intuitive and natural interaction with the designs.

3. Spatial Audio: Spatial audio enhances immersion on VR design platforms. It simulates a realistic acoustic environment in which each sound source is positioned and rendered according to its location in the virtual space, so users perceive sounds as coming from specific directions and distances.
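To make the PBR idea concrete, the sketch below evaluates a widely used physically based shading model for a single light: a Lambertian diffuse term plus a Cook-Torrance specular term built from the GGX normal distribution, Schlick's Fresnel approximation, and a Schlick-Smith geometry term. It works on one grayscale channel for brevity, and the function name `shade_pbr` and its parameters are illustrative assumptions; real engines evaluate a model like this per pixel in a shader.

```python
import math


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _normalize(v):
    length = math.sqrt(_dot(v, v))
    return tuple(x / length for x in v)


def shade_pbr(n, v, l, albedo, metallic, roughness, light_radiance=1.0):
    """Outgoing radiance toward the viewer for one light (single channel).

    n: surface normal, v: direction to the viewer, l: direction to the light.
    albedo, metallic, and roughness are in [0, 1].
    """
    n, v, l = _normalize(n), _normalize(v), _normalize(l)
    n_dot_l = _dot(n, l)
    n_dot_v = _dot(n, v)
    if n_dot_l <= 0.0 or n_dot_v <= 0.0:
        return 0.0  # light or viewer is behind the surface

    h = _normalize(tuple(a + b for a, b in zip(v, l)))  # half vector
    n_dot_h = max(_dot(n, h), 0.0)
    v_dot_h = max(_dot(v, h), 0.0)

    # GGX normal distribution with alpha = roughness^2; roughness is clamped
    # so a perfectly smooth surface does not produce an infinite peak.
    r = max(roughness, 0.05)
    a2 = (r * r) ** 2
    denom = n_dot_h * n_dot_h * (a2 - 1.0) + 1.0
    d = a2 / (math.pi * denom * denom)

    # Schlick-Smith geometry (masking-shadowing) term.
    k = (r + 1.0) ** 2 / 8.0
    g = (n_dot_l / (n_dot_l * (1.0 - k) + k)) * (n_dot_v / (n_dot_v * (1.0 - k) + k))

    # Schlick Fresnel: dielectrics reflect about 4% head-on, metals use albedo.
    f0 = 0.04 * (1.0 - metallic) + albedo * metallic
    f = f0 + (1.0 - f0) * (1.0 - v_dot_h) ** 5

    specular = d * g * f / (4.0 * n_dot_l * n_dot_v)
    diffuse = (1.0 - f) * (1.0 - metallic) * albedo / math.pi
    return (diffuse + specular) * light_radiance * n_dot_l


up = (0.0, 0.0, 1.0)
glossy = shade_pbr(up, up, up, albedo=0.8, metallic=0.0, roughness=0.2)
matte = shade_pbr(up, up, up, albedo=0.8, metallic=0.0, roughness=0.9)
```

With the light and viewer both straight above the surface, the smoother material returns a much brighter highlight than the rough one, which is the behavior PBR roughness is meant to capture.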
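Gesture recognition on top of hand tracking can start as simply as thresholding the distance between two tracked joints. The sketch below detects a pinch from the thumb-tip and index-tip positions that a hand-tracking API reports each frame. The class name `PinchDetector` and the 2 cm / 3 cm thresholds are assumptions for illustration; using two thresholds (hysteresis) keeps tracking jitter near the boundary from toggling the gesture on and off every frame.

```python
import math


class PinchDetector:
    """Tracks pinch state from thumb-tip and index-tip positions in meters."""

    def __init__(self, start_dist=0.02, end_dist=0.03):
        if start_dist >= end_dist:
            raise ValueError("start_dist must be smaller than end_dist")
        self.start_dist = start_dist
        self.end_dist = end_dist
        self.pinching = False

    def update(self, thumb_tip, index_tip):
        """Feed one frame of joint positions; returns True while pinching."""
        d = math.dist(thumb_tip, index_tip)
        if not self.pinching and d < self.start_dist:
            self.pinching = True   # fingertips closed: pinch begins
        elif self.pinching and d > self.end_dist:
            self.pinching = False  # fingertips clearly apart: pinch ends
        return self.pinching


detector = PinchDetector()
gaps = [0.05, 0.015, 0.025, 0.035]  # fingertip separation per frame, meters
states = [detector.update((0.0, 0.0, 0.0), (g, 0.0, 0.0)) for g in gaps]
```

Here `states` is `[False, True, True, False]`: the 2.5 cm frame stays pinched because it lies between the two thresholds. A platform might start a grab on the first frame `update` returns True and release the grabbed model when it returns False.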
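Production spatial audio typically relies on head-related transfer functions (HRTFs) and room modeling. As a much simpler illustration of rendering a sound according to its position, the sketch below computes left and right gains for a mono source using a clamped inverse-distance rolloff and constant-power stereo panning. The function `spatialize`, its parameters, and the default distances are assumptions; it ignores the elevation and front/back cues that HRTFs provide.

```python
import math


def spatialize(source, listener, listener_right, ref_dist=1.0, rolloff=1.0, max_dist=50.0):
    """Return (left_gain, right_gain) for a mono source.

    listener_right must be a unit vector pointing to the listener's right.
    """
    offset = tuple(s - p for s, p in zip(source, listener))
    dist = math.sqrt(sum(c * c for c in offset))

    # Inverse-distance rolloff, with the distance clamped to [ref_dist, max_dist].
    d = min(max(dist, ref_dist), max_dist)
    gain = ref_dist / (ref_dist + rolloff * (d - ref_dist))

    # Pan in [-1, 1]: how far the source lies toward the listener's right.
    pan = 0.0 if dist == 0.0 else sum(o * r for o, r in zip(offset, listener_right)) / dist
    pan = max(-1.0, min(1.0, pan))

    # Constant-power pan law: left^2 + right^2 == gain^2 for any pan value.
    theta = (pan + 1.0) * math.pi / 4.0
    return gain * math.cos(theta), gain * math.sin(theta)


ahead = spatialize((0.0, 0.0, -2.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0))
to_right = spatialize((3.0, 0.0, 0.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0))
```

A source 2 m straight ahead plays equally in both ears at half the reference gain, while a source 3 m to the right plays almost entirely in the right channel at one third of the reference gain.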

How does it work?

VR design platforms for CAD and 3D modeling work by integrating software, hardware, and immersive technologies to create virtual environments where users can interact with their designs. Here’s a step-by-step overview of how these platforms typically work:

1. Design Creation: The design process begins with creating 3D models using CAD software or 3D modeling tools. Designers use these software applications to create detailed and accurate representations of objects, structures, or spaces.

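2. Export to the VR Platform: Once the 3D models are created, they are exported from the CAD or modeling tool and imported into the VR design platform, which acts as the interface between the CAD/3D modeling software and the virtual reality environment. Because CAD tools typically store exact surface or solid geometry while real-time engines render triangle meshes, this step usually involves tessellating the models, often into an interchange format such as FBX, OBJ, or glTF.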

3. Virtual Reality Integration: The VR design platform is built on a real-time engine such as Unity or Unreal Engine, combined with VR SDKs, which together provide the framework for creating immersive virtual environments. They handle rendering, tracking, and user interaction, among other functions.

4. Hardware Setup: Users wear VR headsets, such as the Oculus Rift, HTC Vive, or Windows Mixed Reality devices, which are connected to powerful computers capable of running VR applications. The headsets provide visual and audio feedback, immersing the user in the virtual environment.

5. Tracking and Controllers: VR design platforms utilize tracking systems to capture the user’s real-time movements and position. This can be achieved through sensors, cameras, or laser-based tracking systems. Additionally, hand controllers or gesture recognition technology allow users to interact with the virtual objects and navigate the virtual space.

6. Visualization and Interaction: Once inside the virtual environment, users can view their 3D models at scale and from different angles. They can manipulate, scale, rotate, and translate objects using hand controllers or gestures, which makes interaction with the designs intuitive and immersive. Some platforms also support voice commands or eye tracking for further interaction.

7. Realistic Rendering and Feedback: VR design platforms employ advanced rendering techniques to create realistic visuals, including lighting effects, shadows, reflections, and materials. This enhances the visual fidelity of the designs, providing a more accurate representation of how they would appear in the real world. Haptic feedback may also be incorporated to provide users with tactile sensations, further enhancing the immersive experience.

8. Collaboration and Review: Many VR design platforms offer collaboration features that let multiple users join the same virtual environment simultaneously, so teams can review and discuss designs regardless of location. Users can annotate and mark up models and make design changes in real time, fostering efficient collaboration and communication.

9. Iteration and Visualization: Users can iterate on their designs within the virtual environment by making modifications, testing different configurations, or exploring alternative options. Experiencing the designs at scale and in a realistic setting shortens each design cycle and supports better decision-making.

10. Output and Integration: Once the design is finalized in the virtual environment, it can be exported back to the original CAD or 3D modeling software for further refinement or integration into the production pipeline.
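Step 2 above depends on converting CAD geometry into the triangle meshes a real-time engine renders. The sketch below illustrates that conversion for one simple surface: it tessellates the side wall of a cylinder, choosing the number of segments so that each chord stays within a given deviation (chordal tolerance) of the true circle, and writes the result as Wavefront OBJ text. The function names and the cylinder example are hypothetical; production exporters tessellate arbitrary CAD surfaces and often target richer formats such as FBX or glTF.

```python
import math


def segments_for_tolerance(radius, chord_tol):
    """Smallest segment count whose chords deviate from the circle by <= chord_tol."""
    if chord_tol <= 0:
        raise ValueError("chord_tol must be positive")
    n = 3
    # The sagitta (largest gap between chord and arc) is r * (1 - cos(pi / n)).
    while radius * (1.0 - math.cos(math.pi / n)) > chord_tol:
        n += 1
    return n


def tessellate_cylinder(radius, height, chord_tol):
    """Triangulate the side wall of a cylinder along +z; triangles face outward."""
    n = segments_for_tolerance(radius, chord_tol)
    vertices = []
    for z in (0.0, height):  # bottom ring: 0..n-1, top ring: n..2n-1
        for i in range(n):
            a = 2.0 * math.pi * i / n
            vertices.append((radius * math.cos(a), radius * math.sin(a), z))
    triangles = []
    for i in range(n):
        j = (i + 1) % n
        triangles.append((i, j, j + n))  # counter-clockwise seen from outside
        triangles.append((i, j + n, i + n))
    return vertices, triangles


def mesh_to_obj(vertices, triangles, name="part"):
    """Serialize a triangle mesh as Wavefront OBJ text (OBJ indices are 1-based)."""
    lines = [f"o {name}"]
    lines += [f"v {x:.6f} {y:.6f} {z:.6f}" for x, y, z in vertices]
    lines += [f"f {a + 1} {b + 1} {c + 1}" for a, b, c in triangles]
    return "\n".join(lines) + "\n"


verts, tris = tessellate_cylinder(radius=0.05, height=0.2, chord_tol=0.0005)
obj_text = mesh_to_obj(verts, tris, name="shaft")
```

A tighter `chord_tol` yields a smoother cylinder at the cost of more triangles, which is the same trade-off that level-of-detail and model-optimization techniques manage later in the pipeline.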
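Step 6 describes scaling, rotating, and translating models with hand controllers. One common interaction for this is a two-handed grab: the distance between the hands drives uniform scale, the rotation of the hand-to-hand vector drives orientation, and the midpoint between the hands drives position. The sketch below implements that idea under simplifying assumptions (rotation only about the vertical y axis, right-handed y-up coordinates, and hypothetical names such as `two_hand_update`); a full implementation would use quaternions to allow arbitrary rotation.

```python
import math
from dataclasses import dataclass


@dataclass
class Transform:
    position: tuple  # (x, y, z) in meters
    yaw: float       # rotation about +y in radians
    scale: float     # uniform scale factor


def _yaw_of(a, b):
    """Heading of the vector a->b as a rotation about +y (right-handed, y-up)."""
    return math.atan2(-(b[2] - a[2]), b[0] - a[0])


def two_hand_update(start, left0, right0, left1, right1):
    """New object transform from hand positions at grab start (0) and now (1)."""
    d0 = math.dist(left0, right0)
    if d0 == 0.0:
        raise ValueError("hands must start apart")
    s = math.dist(left1, right1) / d0
    dyaw = _yaw_of(left1, right1) - _yaw_of(left0, right0)

    mid0 = tuple((p + q) / 2.0 for p, q in zip(left0, right0))
    mid1 = tuple((p + q) / 2.0 for p, q in zip(left1, right1))

    # Rotate (about +y) and scale the object's offset from the hands' midpoint.
    ox, oy, oz = (p - m for p, m in zip(start.position, mid0))
    c, sn = math.cos(dyaw), math.sin(dyaw)
    rx, rz = ox * c + oz * sn, -ox * sn + oz * c
    position = (mid1[0] + s * rx, mid1[1] + s * oy, mid1[2] + s * rz)
    return Transform(position, start.yaw + dyaw, start.scale * s)


model = Transform((0.0, 1.0, -1.0), 0.0, 1.0)
l0, r0 = (-0.5, 1.0, 0.0), (0.5, 1.0, 0.0)
spread = two_hand_update(model, l0, r0, (-1.0, 1.0, 0.0), (1.0, 1.0, 0.0))
turned = two_hand_update(model, l0, r0, (0.0, 1.0, 0.5), (0.0, 1.0, -0.5))
```

Spreading the hands to twice their starting distance doubles the model's scale and pushes it twice as far from the hands, while turning the hands a quarter turn swings the model around the midpoint. Computing each frame from the grab-start state, rather than accumulating per-frame deltas, keeps floating-point drift from building up during long manipulations.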

Algorithms for VR Design Platforms:

1. Collision Detection: Collision detection algorithms handle interactions between virtual objects and between objects and the user's input. They calculate intersections, contact points, and distances between objects so that collisions are detected accurately; a collision response step then uses this information to keep objects from intersecting or overlapping. To keep this fast, platforms commonly test simple bounding volumes first and run exact geometric tests only on the pairs that pass. This lets users interact with CAD and 3D models realistically and precisely.

2. Level of Detail (LOD) Techniques: LOD techniques improve the rendering performance of VR design platforms. These algorithms dynamically switch each 3D model between versions with different amounts of detail based on the user's viewing distance and focus. Objects that are far away, or outside the direction the user is looking, are drawn with less detail; this reduces rendering cost while maintaining perceived visual quality and keeping interactions in the virtual environment smooth.

3. Model Optimization: Model optimization algorithms streamline CAD and 3D models for VR rendering. They simplify the geometry, reduce the polygon count, and optimize texture maps to improve performance with minimal loss of visual quality. Techniques such as mesh decimation, texture compression, and LOD generation are used to prepare the models for real-time rendering in VR.
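A common first step in collision detection is a cheap broad-phase test with axis-aligned bounding boxes (AABBs). The sketch below builds AABBs, tests them for overlap, and computes the smallest axis-aligned translation that separates two overlapping boxes, which a simple collision response could apply. The names `AABB` and `separation_vector` are hypothetical, and a real platform would follow a positive AABB test with exact tests against the mesh geometry.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AABB:
    lo: tuple  # (min_x, min_y, min_z)
    hi: tuple  # (max_x, max_y, max_z)

    @staticmethod
    def from_points(points):
        xs, ys, zs = zip(*points)
        return AABB((min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs)))

    def overlaps(self, other):
        # Boxes intersect only if their intervals overlap on all three axes;
        # touching faces count as contact.
        return all(self.lo[i] <= other.hi[i] and other.lo[i] <= self.hi[i] for i in range(3))


def separation_vector(a, b):
    """Smallest axis-aligned translation that moves box a out of box b, or None."""
    if not a.overlaps(b):
        return None
    best_depth, best_axis, best_sign = None, 0, 1.0
    for axis in range(3):
        # Penetration depth if a is pushed toward -axis or toward +axis.
        for depth, sign in ((a.hi[axis] - b.lo[axis], -1.0), (b.hi[axis] - a.lo[axis], 1.0)):
            if best_depth is None or depth < best_depth:
                best_depth, best_axis, best_sign = depth, axis, sign
    mtv = [0.0, 0.0, 0.0]
    mtv[best_axis] = best_sign * best_depth
    return tuple(mtv)


bracket = AABB.from_points([(0.0, 0.0, 0.0), (1.0, 1.0, 1.0), (0.5, 0.2, 0.9)])
panel = AABB((0.8, 0.0, 0.0), (2.0, 1.0, 1.0))
push = separation_vector(bracket, panel)
```

The bracket overlaps the panel by 0.2 along x, so `push` moves it 0.2 in the -x direction, the shallowest way out.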
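LOD selection can be sketched with a screen-space metric: estimate how many pixels an object's bounding sphere covers and pick the most detailed model whose pixel threshold it still meets. The thresholds, the default field of view and resolution, and the `in_view` flag (a stand-in for view-frustum or gaze tests) are illustrative assumptions rather than values from any particular engine.

```python
import math


def projected_size_px(radius, distance, vfov_rad, screen_h_px):
    """Approximate on-screen diameter, in pixels, of a bounding sphere."""
    if distance <= radius:
        return math.inf  # viewer is inside or touching the sphere
    return radius * screen_h_px / (distance * math.tan(vfov_rad / 2.0))


def select_lod(radius, distance, thresholds_px, in_view=True,
               vfov_rad=math.radians(100.0), screen_h_px=2000):
    """Return an LOD index: 0 is full detail, len(thresholds_px) is the coarsest.

    thresholds_px is descending: LOD i is used while the object covers
    at least thresholds_px[i] pixels.
    """
    if not in_view:
        return len(thresholds_px)  # outside the view: cheapest representation
    size = projected_size_px(radius, distance, vfov_rad, screen_h_px)
    for lod, min_px in enumerate(thresholds_px):
        if size >= min_px:
            return lod
    return len(thresholds_px)


levels = [400, 100, 25]
picks = [select_lod(0.5, d, levels, vfov_rad=math.pi / 2, screen_h_px=1000)
         for d in (1.0, 2.0, 10.0, 100.0)]
```

For a 1 m wide object, `picks` steps from full detail at 1 m to the coarsest model at 100 m. Using projected size rather than raw distance means large and small parts switch detail at sensible, different distances.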
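Mesh decimation can be illustrated with vertex clustering, one of the simplest decimation methods: overlay a uniform grid, merge all vertices that fall in the same cell into their average, and drop triangles that collapse. It is shown here because it is short; production tools more often use error-driven edge collapse (for example, quadric error metrics), which preserves shape better. The function name `decimate_by_clustering` and the cell size are hypothetical.

```python
import math
from collections import defaultdict


def decimate_by_clustering(vertices, triangles, cell_size):
    """Simplify a triangle mesh by merging vertices that share a grid cell."""
    cell_of = []
    members = defaultdict(list)
    for idx, v in enumerate(vertices):
        key = tuple(math.floor(c / cell_size) for c in v)
        cell_of.append(key)
        members[key].append(idx)

    # One output vertex per occupied cell, placed at the mean of its members.
    cell_index = {}
    new_vertices = []
    for key, idxs in members.items():
        cell_index[key] = len(new_vertices)
        new_vertices.append(tuple(sum(vertices[i][c] for i in idxs) / len(idxs) for c in range(3)))

    new_triangles = []
    seen = set()
    for tri in triangles:
        a, b, c = (cell_index[cell_of[i]] for i in tri)
        if a == b or b == c or a == c:
            continue  # triangle collapsed to a line or a point
        # Rotate so the smallest index comes first: keeps the winding order
        # while letting duplicate triangles be detected.
        t = (a, b, c)
        k = t.index(min(t))
        canon = t[k:] + t[:k]
        if canon not in seen:
            seen.add(canon)
            new_triangles.append(t)
    return new_vertices, new_triangles


# A flat 5 x 5 grid of vertices (10 cm spacing) split into 32 triangles.
grid = [(i * 0.1, j * 0.1, 0.0) for j in range(5) for i in range(5)]
grid_tris = []
for j in range(4):
    for i in range(4):
        v = j * 5 + i
        grid_tris += [(v, v + 1, v + 6), (v, v + 6, v + 5)]
simple_v, simple_t = decimate_by_clustering(grid, grid_tris, cell_size=0.25)
```

With 25 cm cells the 25-vertex, 32-triangle grid collapses to 4 vertices and 2 triangles; a larger `cell_size` simplifies more aggressively and loses more detail.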

Conclusion:

VR design platforms for CAD and 3D modeling leverage software tools, techniques, and algorithms to create immersive and interactive virtual experiences. Through the integration of CAD and 3D modeling software, virtual reality engines, and SDKs, these platforms enable designers and engineers to visualize and manipulate their designs in a virtual environment. VR design platforms offer realistic and efficient CAD and 3D modeling workflows using visualization and rendering techniques, gesture-based interaction, spatial audio, and algorithms for collision detection, level of detail (LOD), and model optimization. This opens up new possibilities for design innovation and exploration.